Galileo Custom Metrics Campaign — Startup Simulator 3000

The story of how silly startup pitch generator that taught serious AI evaluation, drove enterprise leads, and landed talks at Databricks, O'Reilly, and NYC Tech Week.

Overview

What happens when you combine absurd startup ideas with serious AI evaluation? You get Startup Simulator 3000—a comedy multi-agent LLM app that became one of the most referenced content series at Galileo, drove multiple enterprise leads, and earned speaking slots at both the Databricks Data + AI Conference and O'Reilly's Agent Day.

This is the story of how a silly demo became a serious campaign enabling developer education, , and business impact.

The Challenge

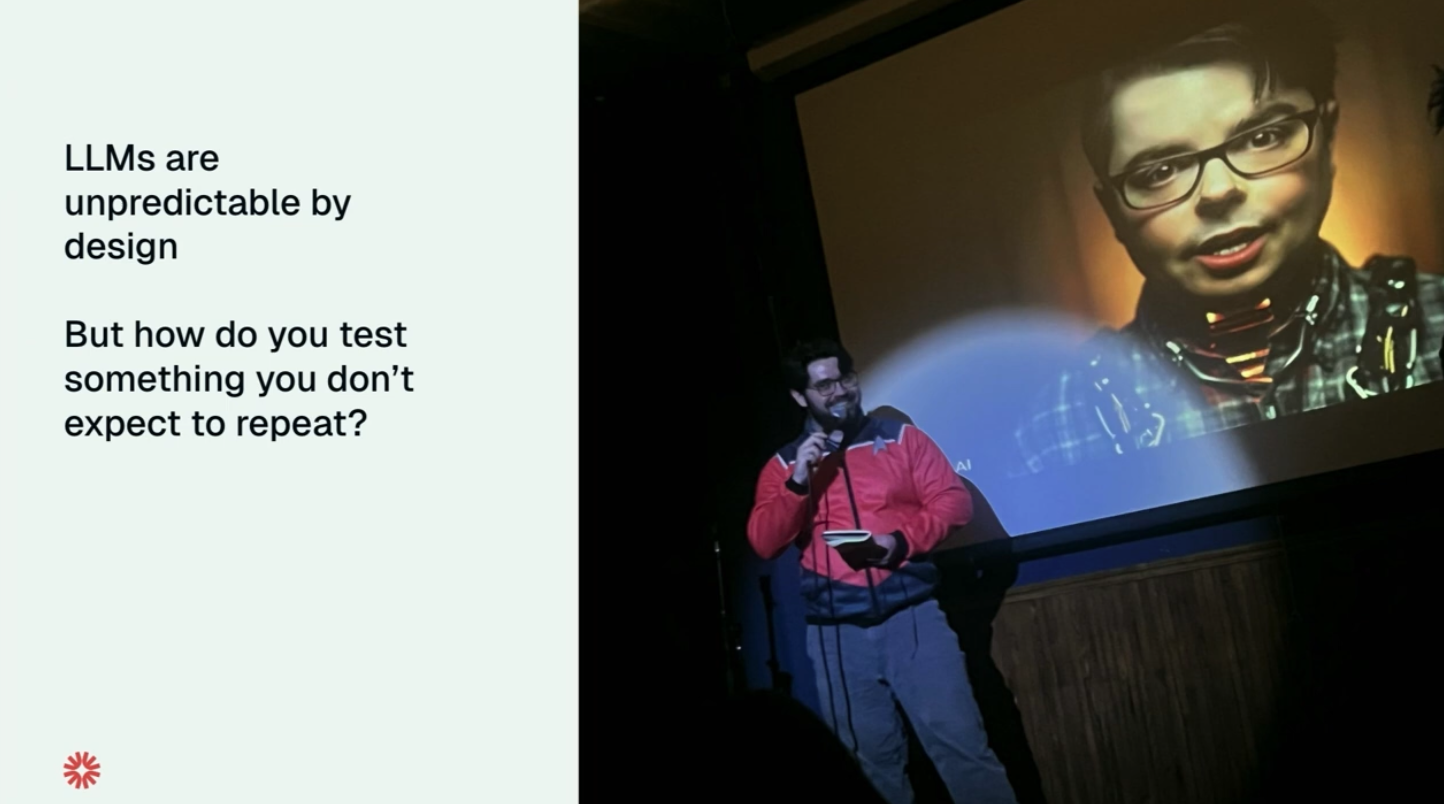

By early 2025, teams building LLM applications were hitting a wall. Generic metrics like accuracy and latency couldn't capture the nuanced, domain-specific quality many teams needed to measure. Galileo's Custom Metrics and platform could solve this problem, but adoption was low.

The issue wasn't the technology—it was the lack of practical, end-to-end examples. Developers needed to see how to design custom metrics, register them, instrument their apps, and interpret the results. They needed a reference implementation that was both technically rigorous and approachable.

More than that, they needed a demo that would stick in their minds.

The Mission

The goal was clear: in three months, conceive and ship an end-to-end developer education campaign that would:

- Build a memorable demo application that showcased custom metrics in action

- Teach the underlying techniques through hands-on tutorials and clear documentation

- Launch a multi-format content series (docs, blogs, videos, conference talks) that engaged developers across multiple touchpoints

- Generate enterprise interest and drive product adoption

This wasn't just about writing docs—it was about building something developers would want to use, talk about, and adapt for their own projects.

A Three-Part Approach

Part 1 — Inception & Prototype

The concept came together quickly: Startup Simulator 3000, a multi-agent LLM application that generates startup pitches ranging from brilliant to absurd. The twist? It would be evaluated using serious, domain-specific custom metrics.

I built the application in Python using Flask, orchestrating three LLM agents that collaborated to generate startup concepts. The architecture integrated:

- OpenAI SDK for agent orchestration and generation

- NewsAPI for lightweight market research context

- Tool-calling patterns for structured agent interactions

- Prompt engineering and guardrails to keep outputs coherent

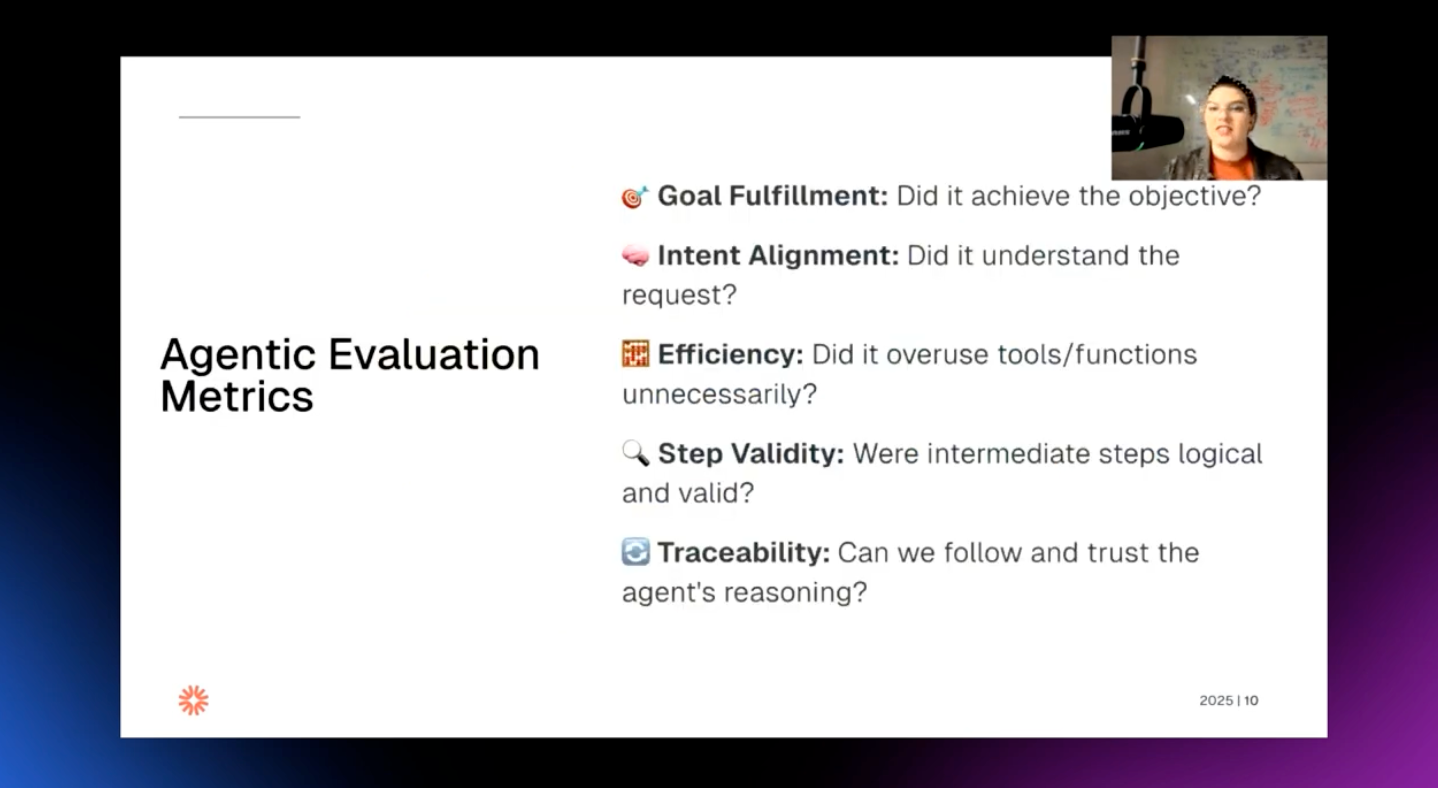

The real innovation was in the evaluation layer. I designed custom LLM-as-a-Judge rubrics tailored to the startup domain:

- Comedic Relevance: Is this application actually, funny??

- Technical Feasibility: Are the technical claims plausible and achievable?

- Satirical Relevance: Does this follow standard satirical tropes?

- Originality: Does it avoid tired tropes and clichés?

Each rubric came with detailed prompts and scoring functions. I registered these as custom metrics via the Galileo SDK and instrumented the entire application with Galileo, capturing:

- Requests and responses for every agent interaction

- Prompt templates and model version tags

- Latency, token usage, and cost per request

- Trace IDs for end-to-end observability

Part 2 — Testing & Documentation

With the prototype functional, I shifted focus to refinement and education. I ran hallway tests and design-partner reviews, gathering feedback on prompt clarity, agent roles, and metric rubrics. This led to simplified Flask endpoints, better error handling, and more nuanced scoring.

I then built the documentation and educational layer:

- Developer cookbooks and how-to guides

- Step-by-step tutorials with code samples

- Blog posts explaining why domain-specific metrics matter

- YouTube walk throughs for hands-on replication

Each asset was designed to make complex evaluation workflows feel accessible, playful, and practical.

Part 3 — Launch & Distribution

The final part was about orchestration. The content series went live across multiple platforms:

- Galileo Docs site with the full cookbook and how-to guides

- Galileo blog with narrative posts positioning the campaign

- YouTube walkthrough videos

- GitHub with the open-sourced application code

- Meetup Tour at NYC Tech Week and NYC Agent Week

- Conference talks at Databricks and O'Reilly events

Cross-team coordination ensured that:

- Sales teams had enablement resources

- Solutions engineers had another hands-on demo application

- Developers had self-serve tutorials and assets.

Post-launch, the one demo turned into an effective (and engaging) content series that was able to meet users where they're at.

Technical Depth

This campaign showcased technical breadth across the full stack:

- Python/Flask development for a production-ready demo service

- Multi-agent LLM system design with orchestrated CEO/CTO/CMO roles

- Custom LLM-as-a-Judge metrics with domain-specific rubric prompts

- OpenAI SDK integration for generation and evaluation

- Galileo SDK integration for registering and logging custom metrics

- NewsAPI integration for real-world context signals

- Observability instrumentation with Galileo Observe (traces, tags, latency, costs)

- Prompt engineering, guardrails, and evaluation harnesses to ensure quality and reproducibility

Campaign Expansiveness

The campaign spanned multiple formats and distribution channels:

- Multi-format content: in-product docs, cookbooks, how-to guides, blog posts, video walkthroughs, and conference presentations

- Cross-platform distribution: developer docs site, engineering blog, YouTube, GitHub, social media, community channels, and sales/solutions enablement

- End-to-end execution: concept → prototype → testing → documentation → launch → iteration → feedback loops

The Impact

Awareness and Education

Startup Simulator 3000 became a heavily referenced content series at Galileo. Developers finally had a concrete, hands-on example of how to design and implement domain-specific custom metrics. The content provided reusable patterns that teams could adapt to their own use cases—from ecommerce recommendation quality to medical chatbot safety.

Business Results

The campaign drove multiple enterprise leads and provided a clear proof point for sales teams. Prospects could see custom metrics in action, understand the implementation path, and envision how to apply them to their own products.

Conference Recognition

The work caught the attention of multiple major industry platforms:

- Databricks Data + AI Conference: Presented to a packed room, generating booth traffic and customer conversations

- NYC Tech Week 2025: Showcased the custom metrics approach to the NYC tech community

- NYC Agents Week 2025 with Convex: Presented the multi-agent architecture and evaluation patterns

- O'Reilly's Agent SuperStream Day: Featured as a speaker in Agentic AI evaluation, demonstrating industry-wide recognition of the content quality and technical rigor

These talks reinforced Galileo's position as a leader in AI observability and evaluation across diverse technical audiences.

Product Feedback Loop

Developers using the tutorial provided valuable feedback that informed product improvements. The campaign influenced API ergonomics for custom metrics registration and improved the UX of Galileo's metrics dashboard.

Community Adoption

The open-source repository and reusable templates led to community-driven adaptations. At meetups, I was able to see this in action, with developers cloning the repository and later adapting the rubrics to new domains (finance, healthcare, gaming).

Resources

- GitHub Repository: Startup Simulator 3000 source code

https://github.com/rungalileo/sdk-examples/tree/main/python/agent/startup-simulator-3000 - Cookbook: Custom Metric — Startup Simulator 3000

https://v2docs.galileo.ai/cookbooks/use-cases/custom-metric-startup-sim/custom-metric-startup-sim - Blog: Silly startups, serious signals

https://galileo.ai/blog/silly-startups-serious-signals-how-to-use-custom-metrics-to-measure-domain-specific-ai-success - Blog: Why generic AI evaluation fails

https://galileo.ai/blog/why-generic-ai-evaluation-fails-and-how-custom-metrics-unlock-real-world-impact - How-to Guide: Registering and using custom metrics

https://docs.galileo.ai/galileo/gen-ai-studio-products/galileo-observe/how-to/registering-and-using-custom-metrics - Conference Talk; Databricks Data + AI Summit

"Generating Laughter: Testing and Evaluating the Success of LLMs for Comedy":

https://www.youtube.com/watch?v=zn5WvgZcdMA - YouTube Video; How to choose the right evaluation metrics:

https://www.youtube.com/watch?v=bWivv9kVz3E - O'Reilly Agents Education Day; Agentic Experience Metrics with Erin Mikail Staples:

https://www.youtube.com/watch?v=LP63a53S-So